Thanks to the folks at PrimeSense, libraries are now available for skeleton tracking and gesture recognition.

UPDATE: Check here if you've gotten NITE working and want to try using the Kinect as a Minority Report-style mouse.

UPDATE: I've added a description of how to track multiple hands under the Sample-PointViewer section.

Here's how I got things working in Gentoo:

(1) mkdir ~/kinect && cd ~/kinect

(2) git clone https://github.com/OpenNI/OpenNI.git

(3) cd OpenNI/Platform/Linux-x86/Build

(4) make && sudo make install

(5) cd ~/kinect/

(6) git clone https://github.com/boilerbots/Sensor.git

(7) cd Sensor

(8) git checkout kinect

(9) cd Platform/Linux-x86/Build

(10) make && sudo make install

(11) Go to this page at OpenNI to download the latest NITE release for your platform:

NITE download page, or for the impatient:

32-bit / 64-bit

UPDATE: The download links now point to OpenNI and should work again.

(12) Save the NITE tarball to ~/kinect and untar it.

(13) cd ~/kinect/NITE/Nite-1.3.0.17/Data

(14) Open Sample-User.xml and replace the existing License line with the line below. NOTE: this is case sensitive!

`<License vendor="PrimeSense" key="0KOIk2JeIBYClPWVnMoRKn5cdY4="/>`

(15) Repeat step 14 for Sample-Scene.xml and Sample-Tracking.xml.

(16) Open Sample-User.xml and replace the existing MapOutputMode line with the line below. NOTE: this is case sensitive!

`<MapOutputMode xRes="640" yRes="480" FPS="30"/>`

(17) Repeat step 16 for Sample-Scene.xml and Sample-Tracking.xml.
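If you'd rather not hand-edit three files twice each, steps 14 to 17 can be scripted. The sketch below is my own, not something that ships with NITE, and it rests on a few assumptions: GNU sed (standard on Gentoo), each License and MapOutputMode element being a self-closing tag on a single line, and `patch_nite_xml` being just a hypothetical helper name. It rewrites every MapOutputMode line in each file, so if a file contains more than one and you only want to change one of them, stick to editing by hand.

```shell
#!/usr/bin/env bash
# Sketch: apply steps 14-17 (License key + 640x480 map mode) to the three
# NITE sample configs. Assumes GNU sed and that each <License .../> and
# <MapOutputMode .../> element is a self-closing tag on a single line.
# patch_nite_xml is a hypothetical helper name, not something NITE provides.

NITE_KEY='0KOIk2JeIBYClPWVnMoRKn5cdY4='

patch_nite_xml() {
  local data_dir="$1" f
  local files=(Sample-User.xml Sample-Scene.xml Sample-Tracking.xml)

  # Make sure all three files exist before touching any of them.
  for f in "${files[@]}"; do
    if [ ! -f "$data_dir/$f" ]; then
      echo "missing: $data_dir/$f" >&2
      return 1
    fi
  done

  for f in "${files[@]}"; do
    # Replace the whole License and MapOutputMode elements, keeping any
    # indentation before them. -i.bak leaves the original as <file>.bak.
    sed -i.bak \
      -e "s|<License [^>]*/>|<License vendor=\"PrimeSense\" key=\"${NITE_KEY}\"/>|" \
      -e 's|<MapOutputMode [^>]*/>|<MapOutputMode xRes="640" yRes="480" FPS="30"/>|' \
      "$data_dir/$f"
  done
}
```

To use it, source the snippet and run `patch_nite_xml ~/kinect/NITE/Nite-1.3.0.17/Data`. The untouched originals are kept next to the edited files as `*.xml.bak`, so it's easy to diff or roll back.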

(18) niLicense PrimeSense 0KOIk2JeIBYClPWVnMoRKn5cdY4=

(19) cd ~/kinect/NITE/Nite-1.3.0.17/

(20) sudo ./install.bash

(21) make && sudo make install

(22) cd ~/kinect/NITE/Nite-1.3.0.17/Samples/Bin

Now you should finally be sitting in a directory containing all the sample binaries you can play with. Here's what they should look like:

Sample-TrackPad

This app tracks your hand and shows its relative position on a grid. Run it and wave your hand; one of the squares on the grid should turn yellow to indicate your hand's location, as seen below:

You should also get some debug output in your console:

Sample-Players

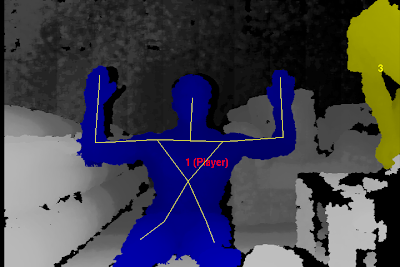

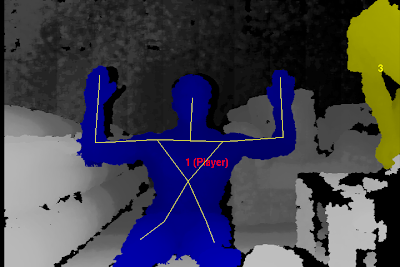

This app demonstrates skeletal tracking. After starting it up, move around or wave until your body turns blue (subsequent players are shown in other colors, e.g. player 2 is green, player 3 yellow, etc.). At this point your viewer window should look vaguely like this:

and you should see something like this in your console:

Look for pose

Found pose "Psi" for user 1

Now hold your arms out to your sides, bent 90 degrees at the elbows as shown below, until a skeleton is overlaid on the image of your body:

At this point something like this should have appeared in your console:

Calibration started

Calibration done [1] successfully

Writing 217.596 50 50 78.4388 64.6762

Matching for existing calibration

Read 217.596 50 50 78.4388 64.6762

This one seems to do some sort of gesture recognition and dynamically adjusts the camera resolution, so it's probably zooming in on an area of interest. When it starts, it asks you to perform a focus gesture. The NITE documentation doesn't seem to define what this is, but simply sticking one hand out in front of you seems to make it happy, and you'll see the following output:

Sample-PointViewer

This app does hand tracking.

UPDATE: To allow multiple hands to be tracked, you will need to edit /usr/etc/primesense/XnVHandGenerator/Nite.ini and uncomment the two config parameters it contains. In other words, remove the semicolon at the start of each line so that Nite.ini looks like this:

[HandTrackerManager]

AllowMultipleHands=1

TrackAdditionalHands=1
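The Nite.ini edit can also be done with a one-off sed call. This is a sketch under a couple of assumptions: GNU sed, and that the two settings appear in the file as `;AllowMultipleHands=...` and `;TrackAdditionalHands=...` lines. The helper name `enable_multiple_hands` is hypothetical. It strips any leading semicolon from those two lines and forces both values to 1, leaving every other line alone.

```shell
#!/usr/bin/env bash
# Sketch: enable multi-hand tracking in NITE's Nite.ini.
# Assumes GNU sed. enable_multiple_hands is a hypothetical helper name.

enable_multiple_hands() {
  # Default to the Nite.ini path from the post; pass another path to override.
  local ini="${1:-/usr/etc/primesense/XnVHandGenerator/Nite.ini}"
  if [ ! -f "$ini" ]; then
    echo "missing: $ini" >&2
    return 1
  fi
  # Drop an optional leading ';' (plus surrounding whitespace) from the two
  # settings and force their value to 1. -i.bak keeps the original as *.bak.
  sed -i.bak -E \
    's/^[[:space:]]*;?[[:space:]]*(AllowMultipleHands|TrackAdditionalHands)[[:space:]]*=.*$/\1=1/' \
    "$ini"
}
```

Since the file lives under /usr/etc, you'll need root: for example, open a root shell with `sudo -s`, source the snippet, and call `enable_multiple_hands` with no arguments. Running it twice is harmless, because already-uncommented lines just get rewritten to the same value.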

To keep track of individual hands persistently in your own code, you can use XnVHandPointContext.nID in your OnPointUpdate callback.

Sample-Boxes

This example lets you click one of three boxes. Your hand motion is tracked by a slider and, depending on the context, up, left, and right gestures are recognized.

Sample-CircleControl

Wave to make the border of the window turn green. Then I think you need to perform a focus gesture; after that, if you trace out a circle in the air with your hand, the onscreen circle will follow your hand as seen below. In other words, if you draw a clockwise circle in the air, the clock hand will also spin clockwise, and vice versa. For some reason, this appears to be annoyingly inconsistent.

Sample-SceneAnalysis

This seems to do only player detection, without skeleton tracking: